By Edith Anna Ehrenbrandtner and Nada Gamal

It is no longer a secret that information is power: whoever holds information and knows how to use it holds the power to either build or dismantle, depending on how it is used. Recently, the word ‘information’ has most often appeared alongside two worrying prefixes: mis- and dis-.

Although neither is a new phenomenon, it is the second that most captures the attention of politicians and policymakers. But why? The European Commission defines disinformation as “false or misleading content that is spread with an intention to deceive or secure economic or political gain and which may cause public harm”.

In the Internet era, social media platforms have become the new fora for supplying and consuming news, as well as for pluralistic democratic debate. However, the web’s open space, protected in the name of the right to freedom of expression, also gives room to malicious cyber actors. Both state and non-state actors deliberately spread distorted or outright false information against varied targets, with a range of motivations (often political or economic), using sophisticated manipulation tactics.

What is ‘new’ is the scale and speed of its diffusion, as well as its ability to directly influence public opinion. As Arturo di Corinto puts it, “Disinformation strategies are based on the manipulation of perceptions”, and the impact of these campaigns is severe. Covid-19 and vaccines are only the latest examples of this looming threat to our democratic values and human rights.

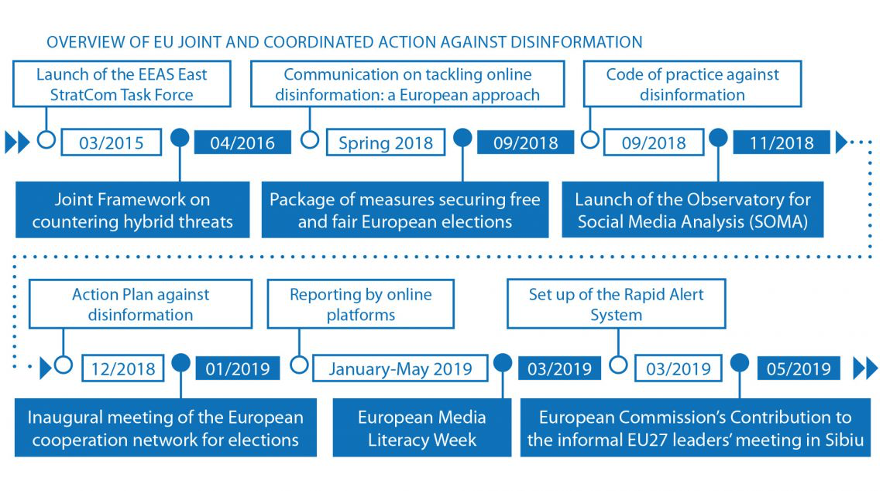

Disinformation, both as a new hybrid threat and as a problem on an industrial scale, has drawn the EU’s attention. In 2018, the EU developed a broad set of concrete measures to counter its proliferation, although action had already started in 2015 with the launch of the EEAS East StratCom Task Force, which addresses Russia’s ongoing disinformation campaigns by exposing their tools, techniques and intentions. The EU adopted an approach that brings the expertise of all relevant stakeholders (the public and private sectors alongside civil society) around one table: the so-called ‘multidisciplinary’ approach.

Quite unsurprisingly, social media providers are core pillars of the Union’s strategy against disinformation. Since they are the gatekeepers of online data, they are considered chiefly responsible for creating a healthy media environment. An international survey revealed that, during the pandemic, Facebook was (and arguably still is) the greatest source of disinformation relating to Covid-19.

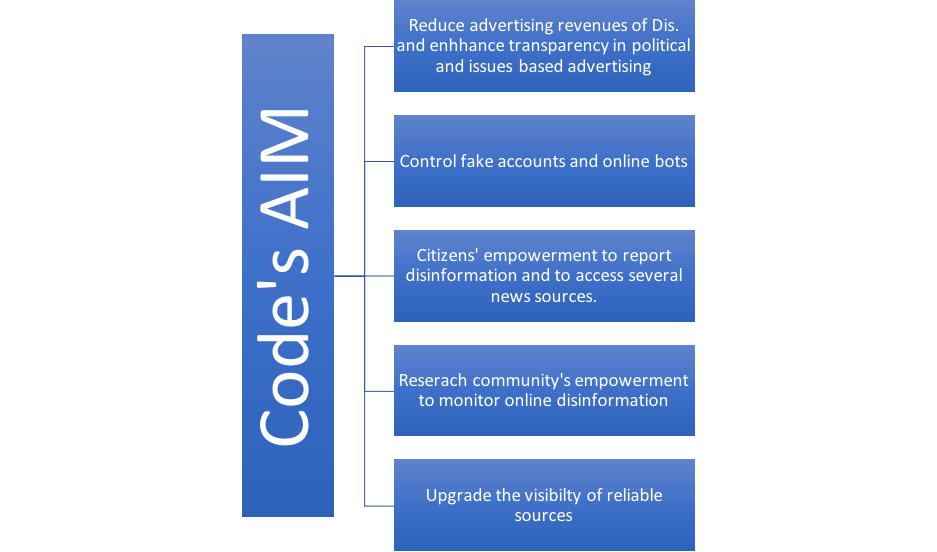

As a consequence, Brussels has launched a set of plans to address the disinformation threat, leveraging the crucial role of providers. A milestone document in this effort, the Code of Practice on Disinformation, is the outcome of a voluntary agreement between online platforms, leading social networks, advertisers and the advertising industry. The code, signed in October 2018, sets out international self-regulatory standards to limit the spread of disinformation. To date, there are 16 signatories.

To assess how transparent and accountable these actors are in complying with their commitments to counter disinformation, the Commission carries out targeted monitoring and asks them to submit periodic reports on the actions taken and their effectiveness.

On paper, the code appears very ambitious. Indeed, Facebook and Twitter report that, together, they removed more than 317,000 accounts and pages between 2019 and 2020. The reports submitted by both providers also show evident progress.

However, evidence also shows an exponential increase in 2020 in the number of countries using social media for computational propaganda and disinformation. The same is true for the number of private firms running manipulation campaigns. Why is that?

- First, the code was drafted by its own signatories, which explains the uneven and loose obligations it places on these platforms.

- Second, the code does not consider cases in which the private actors themselves are the source of disinformation: what legal action could then be taken? We should bear in mind that the private and public sectors have different interests: what if their incentive structures do not align?

- Third, the code lacks a well-defined monitoring mechanism. Indeed, a closer look at the Commission’s website reveals irregularities in the submission and publication of the periodic reports mentioned above, except during the Covid-19 period (the case of TikTok is telling).

- Fourth, it remains a voluntary document and therefore lacks any binding legal, or even political, force. It follows that a binding legal and regulatory framework to counter disinformation is still missing.

In December 2020, the Commission released the European Democracy Action Plan, which seeks, among other objectives, to reinforce the bloc’s policy framework and measures for addressing disinformation. The plan mainly aims to strengthen the Code of Practice on Disinformation by establishing “a co-regulatory framework of obligations and accountability of online platforms”, in line with the upcoming Digital Services Act.

The plan places a major focus on technical aspects, such as advertising and algorithmic processes, as well as the flow of content. This time, the Commission’s role is more evident: in spring 2021, it is expected to issue guidance to strengthen the Code of Practice and to set up a “more robust framework for monitoring its implementation, awareness raising tools, removing posts containing disinformation”.

The Action Plan is expected to be implemented gradually until 2023, ahead of the next European elections. Is that enough to fight data weaponisation and to shape the future governance of our information landscape?

An overview of the policies of the main social media providers reveals the strategy’s current state of implementation. However, to what extent are the signatories’ terms of service enforced equally and fairly? Surprisingly, the Action Plan refers explicitly to “very large” online platforms. Still, these platforms are not subject to the same national rules and professional standards, and very few measures have been adopted to fill this gap.

Moreover, as GLOBSEC’s latest report highlights, the implementation of responses to shared threats remains quite low. Disinformation is a constantly evolving threat, so countering it requires a continuously updated system of information sharing. However, such sharing only occurs during elections or the Covid-19 pandemic. The strategy presented is not exhaustive either: for instance, it does not mention “deepfakes” or any measures tailored to them.